Intro

Hi, I am Shravan, and this page describes my Google Summer of Code 2020 project with CERN-HSF. The complete code can be found here. I primarily worked on the CMSSW (CMS Software) framework. The project focuses on integrating the end-to-end (E2E) deep learning code with the CMSSW inference engine for use in reconstruction algorithms in the offline and high-level trigger systems of the CMS experiment.

CMS Experiment

A few years before the LHC began operating at CERN, the CMS apparatus was identified as having properties well suited to particle-flow (PF) reconstruction: a highly segmented tracker, a fine-grained electromagnetic calorimeter, a hermetic hadron calorimeter, a strong magnetic field, and an excellent muon spectrometer. An important aspect of searches for new physics at the Large Hadron Collider (LHC) is the identification and reconstruction of single particles and jets in collision events. The Compact Muon Solenoid (CMS) Collaboration uses a PF reconstruction approach: a rule-based procedure that casts raw detector data into progressively more physically motivated quantities until it arrives at particle-level data. While such approaches have proven useful, they depend heavily on our ability to model all aspects of particle decay phenomenology completely and effectively.

From particle identification to the discovery of the Higgs boson, deep learning algorithms have become an increasingly important tool for data analysis at the Large Hadron Collider (LHC). Powerful image-based Convolutional Neural Network (CNN) algorithms have emerged that can train directly on raw data and learn the pertinent features unassisted; these are the so-called end-to-end deep learning classifiers. We present an end-to-end deep learning approach for the reconstruction of single particles, jets and event topologies. The goal is to implement the final end-to-end model in the CMSSW (CMS Software) inference engine, with inference also running on GPUs for faster computation. The deep learning models are developed, trained and run on simulated data from the Electromagnetic Calorimeter (ECAL) of the CMS detector at the LHC.

In this project, we applied the E2E approach to the discrimination of single particles (electron vs photon, quark vs gluon) and to jet classification for top quarks, and integrated E2E inference with the CMSSW framework for production use.

CMSSW Framework

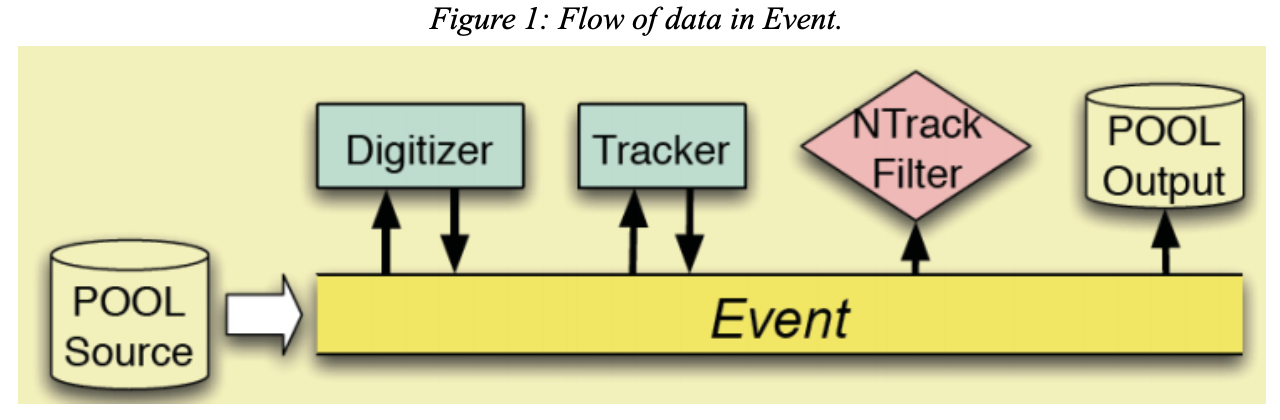

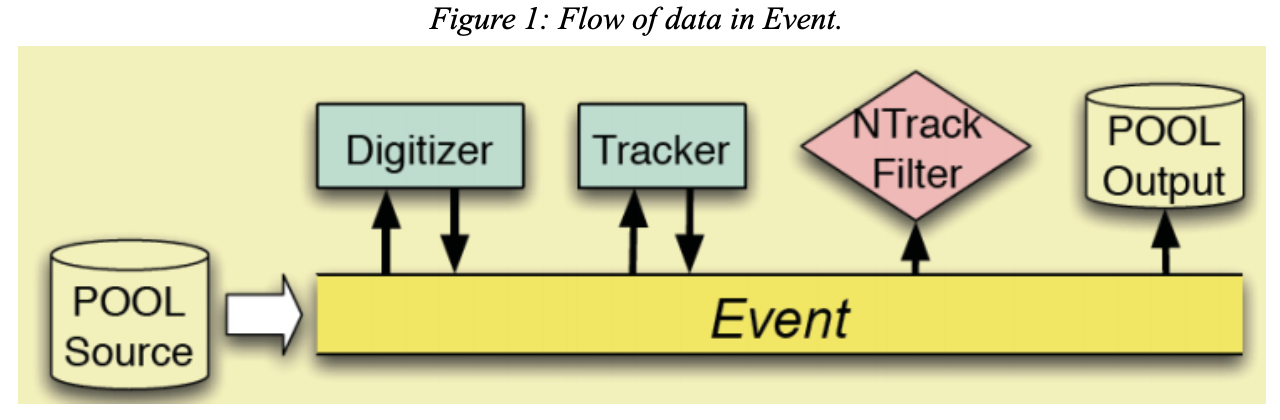

(source)

CMSSW is the overall collection of software built around a framework, an Event Data Model (EDM), and the services needed by the simulation, calibration and alignment, and reconstruction modules that process event data so that physicists can perform analysis. The primary goal of the framework and the EDM is to facilitate the development and deployment of reconstruction and analysis software. The CMSSW event processing model consists of many plug-in modules that are managed by the framework. All the code needed for event processing (calibration, reconstruction algorithms, etc.) is contained in these modules, which can be used for both detector and Monte Carlo data. The main module types include:

EDProducer: creates new data to be placed in the Event.

EDAnalyzer: studies properties of the Event.

EDFilter: decides if processing should continue on a path for an Event.

OutputModule: stores the data from the Event.

The CMS Event Data Model (EDM) is centered around the concept of an Event. An Event is a C++ object container for all RAW and reconstructed data related to a particular collision. Data are passed from one module to the next via the Event and are accessed only through the Event. The diagram above illustrates this flow of data. All the objects in the Event may be stored, individually or collectively, in ROOT files.
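To make the roles of the module types concrete, here is a toy sketch in plain Python. It is not the CMSSW API: the `Event` class, the function names and the `hitEnergies` label are all hypothetical, chosen only to show a producer adding data to the Event, a filter deciding whether the path continues, and an analyzer reading the result.

```python
# Toy illustration only: these names mimic the roles of CMSSW module types,
# they are not the CMSSW API.

class Event:
    """Container holding all data products for one collision."""

    def __init__(self, event_id):
        self.event_id = event_id
        self._products = {}

    def put(self, label, product):
        # In this toy model a product is added once and never overwritten.
        if label in self._products:
            raise KeyError(f"product '{label}' already exists")
        self._products[label] = product

    def get(self, label):
        return self._products[label]


def hit_producer(event):
    """EDProducer role: create new data and place it in the Event."""
    event.put("hitEnergies", [0.5, 12.0, 3.2])


def energy_filter(event):
    """EDFilter role: decide whether the path continues for this Event."""
    return max(event.get("hitEnergies")) > 10.0


def energy_analyzer(event, summary):
    """EDAnalyzer role: study the Event without adding anything to it."""
    summary.append(sum(event.get("hitEnergies")))


def run_path(event, summary):
    """Run the modules in order; stop the path if the filter rejects."""
    hit_producer(event)
    if not energy_filter(event):
        return False
    energy_analyzer(event, summary)
    return True
```

Calling `run_path(Event(1), summary)` with an empty list `summary` runs all three steps, and every exchange of data between them goes through the Event.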

Workflow

Data Format:

Various successive operations refine the raw data from the online system. They apply calibrations and create higher-level physics objects. CMS uses a variety of data formats, differing in detail, size and refinement, to write this data at its various stages. A data format is essentially a C++ class, where a class defines a data structure (a data type with data members). Data is added to an Event using an EDProducer module. Event information from each step in the simulation and reconstruction chain is logically grouped into a data-tier. RECO (reconstructed) data is one example of a data-tier. It contains objects from all stages of reconstruction, is derived from RAW data, and provides access to reconstructed physics objects for physics analysis in a convenient format. In my project I mainly used objects of the RECO data-tier. After processing the data and running the inference, the predictions, along with other objects (data frames), are added to the Event using an EDProducer.

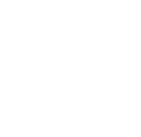

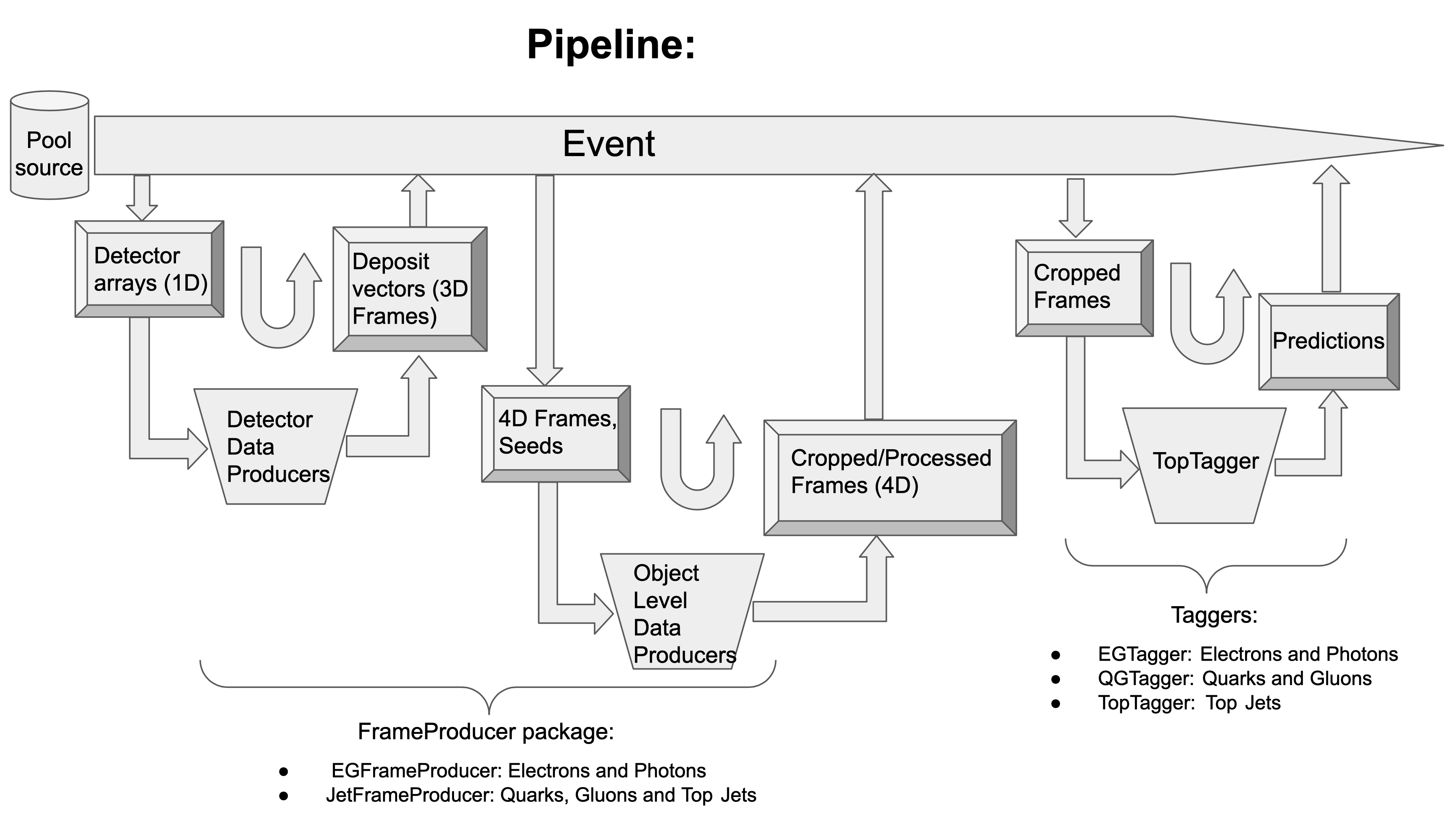

Data flow in the E2E project:

Before running the inference on the particles (electron/photon, quark/gluon, top quarks, etc.), the data from the EDM file needs to be preprocessed. The full chain consists of reading the data from the EDM ROOT file, storing the extracted detector image arrays back to the EDM ROOT file (where they can be used directly for analysis and processing such as running the inference), reading the seed coordinates from the EDM file, cropping the detector image arrays into 2-dimensional frames centered on those seed coordinates, running the inference on the cropped frames, and storing the predictions back to the EDM production file. The steps in this flow of data are described below:

Reading Detector Images and Storing the Extracted Image Vectors to the EDM ROOT File:

The EDProducer DetImgProducer reads the object collections, such as ‘reducedEcalRecHitsEB’, ‘gedPhotons’ and ‘reducedHcalRecHits:hbhereco’, that are required for processing and running the inference. The extracted arrays are stored in the EDM ROOT file as flat 1-dimensional vectors (for example EcalRecHitsEB, HBHE, stitched ECAL, and tracks at stitched ECAL). These flattened detector image vectors can be used directly for further processing and inference.
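As a small illustration of what storing an image as a flat 1-dimensional vector means, the sketch below flattens a 2D image row by row and recovers it again. The row-major ordering and the function names are assumptions made for this example; the actual layout used by DetImgProducer is defined in the project code.

```python
def flatten(image):
    """Flatten a 2D image (a list of rows) into a 1D list, row by row."""
    return [value for row in image for value in row]


def unflatten(flat, n_cols):
    """Rebuild the 2D image from a row-major flat vector."""
    if len(flat) % n_cols != 0:
        raise ValueError("vector length is not a multiple of the row width")
    return [flat[i:i + n_cols] for i in range(0, len(flat), n_cols)]


def flat_index(row, col, n_cols):
    """Position of pixel (row, col) inside the row-major flat vector."""
    return row * n_cols + col


# Tiny 2 x 3 example image (rows could stand for eta, columns for phi).
image = [[1, 2, 3],
         [4, 5, 6]]
flat = flatten(image)
```

With this layout, only the row width has to be known to locate any pixel in the stored vector, which is what the later cropping step relies on.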

Extracting Jet seed coordinates:

To crop the frames from the detector image arrays, the centers of the frames must first be determined. The frames are centered on the seed coordinates found in the relevant object collections (for example ‘gedPhotons’ for photon seeds) for the respective particles. This task is performed by the EDProducers EGProducer and QGProducer, for photons and quarks/gluons respectively. Seeds are selected according to certain criteria: seeds whose eta coordinate lies at the edge of the detector image are discarded, as are seeds with energy less than zero.
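The two selection criteria can be sketched as a simple filter. The tuple layout `(ieta, iphi, energy)`, the image height of 170 rows and the margin of 16 rows (half of a 32-pixel frame) are assumptions for illustration, not values taken from the project code.

```python
def select_seeds(seeds, n_eta=170, margin=16):
    """Keep seeds that allow a full frame to be cropped around them.

    seeds  : list of (ieta, iphi, energy) tuples (hypothetical layout)
    n_eta  : number of rows of the detector image along eta (assumed)
    margin : rows needed on each side of the seed (assumed half frame size)
    """
    selected = []
    for ieta, iphi, energy in seeds:
        if energy < 0:
            continue  # seeds with energy less than zero are dropped
        if ieta < margin or ieta > n_eta - margin:
            continue  # too close to the eta edge of the image
        selected.append((ieta, iphi, energy))
    return selected


seeds = [
    (5, 10, 20.0),    # too close to the lower eta edge
    (85, 100, -1.0),  # negative energy
    (85, 359, 30.0),  # kept
    (154, 0, 5.0),    # kept: a 32-row frame still fits
    (155, 0, 5.0),    # too close to the upper eta edge
]
good_seeds = select_seeds(seeds)
```

No cut is applied along phi here, on the assumption that the image can wrap around in that direction.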

Preparing the frames for inference:

After the centers are determined, frames of the required size are cropped from the detector image vectors that DetImgProducer stored in the EDM file. For photons, the cropped frame size is 32x32; for quarks and gluons, it is 125x125. The cropping is performed by EGProducer and QGProducer for their respective particles. HBHE frames are first upsampled (to convert HB tower coordinates to EB crystal coordinates), and the necessary striding is applied, before the frames are cropped and fed to the inference.
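A minimal sketch of the cropping and upsampling steps follows. It assumes a row-major flat vector, wraps around in phi because the detector is cylindrical, and upsamples by simply repeating each cell. These are illustrative choices, and the function names are hypothetical rather than those used in EGProducer or QGProducer.

```python
def crop_frame(flat, n_eta, n_phi, seed_eta, seed_phi, size):
    """Crop a size x size frame centered on the seed from a flat image."""
    half = size // 2
    frame = []
    for d_eta in range(-half, size - half):
        ieta = seed_eta + d_eta
        if not 0 <= ieta < n_eta:
            raise ValueError("frame extends past the eta edge of the image")
        row = []
        for d_phi in range(-half, size - half):
            iphi = (seed_phi + d_phi) % n_phi  # phi is periodic (assumed wrap)
            row.append(flat[ieta * n_phi + iphi])
        frame.append(row)
    return frame


def upsample(image, factor):
    """Repeat every cell factor x factor times (nearest-neighbour)."""
    out = []
    for row in image:
        wide = [value for value in row for _ in range(factor)]
        out.extend(list(wide) for _ in range(factor))
    return out


# Tiny 4 x 6 image stored as a flat vector with values 0..23.
flat_image = list(range(24))
frame = crop_frame(flat_image, n_eta=4, n_phi=6, seed_eta=2, seed_phi=0, size=2)
coarse = [[1, 2],
          [3, 4]]
fine = upsample(coarse, 2)
```

The same `crop_frame` call works for even sizes such as 32 and odd sizes such as 125, since the window is built from `-half` to `size - half`.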

Running the Inference and storing the predictions:

Inference with a trained model is run using the TensorFlow C++ API available in the CMSSW framework. A TensorFlow model trained in Python should be saved in protobuf (.pb) format. The name of the protobuf file can be passed as a parameter to the EDProducers, which call the predict_tf function to run the inference and make the predictions. Some care is needed when preparing the protobuf models in Python. The Python API of TensorFlow is updated more actively than the C++ TensorFlow available in CMSSW, so models trained with a recent Python release can run into version incompatibilities. To avoid such issues, it is advisable to save the models in Python using TensorFlow 1.6.0 or an earlier version. This ensures that the ops used when creating the protobuf files are compatible with the TensorFlow graph interpreter of the CMSSW framework. If Keras is used, the recommended version is Keras 2.1.5.
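One way to guard against exporting a model from an incompatible environment is to check the installed version before saving. The helper below is a hypothetical sketch in plain Python: it compares version strings numerically, so that for example 1.15.0 is correctly treated as newer than 1.6.0. The function and constant names are not part of the project.

```python
import re

MAX_TENSORFLOW = "1.6.0"     # latest version advised on this page
RECOMMENDED_KERAS = "2.1.5"  # Keras version recommended on this page


def parse_version(version):
    """Turn a string such as '1.6.0' or '1.6.0rc1' into (1, 6, 0)."""
    parts = []
    for piece in version.split(".")[:3]:
        match = re.match(r"\d+", piece)
        parts.append(int(match.group()) if match else 0)
    while len(parts) < 3:
        parts.append(0)
    return tuple(parts)


def is_compatible(installed, maximum=MAX_TENSORFLOW):
    """True if the installed version is not newer than the maximum."""
    return parse_version(installed) <= parse_version(maximum)
```

In a training script this could be used as `is_compatible(tf.__version__)` just before the model is written to a .pb file.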

After the inference is run successfully, the predictions are stored in the edm root file.

Configuration Files in CMSSW:

All CMS code is run by passing a configuration file (_cfg.py) to the CMSSW executable cmsRun. A configuration file defines which modules are loaded, the order in which they are run, and the configurable parameters used to run them. Configurations are written in Python. The python folder of the submitted repository contains all the configuration files for the EDProducers.
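For orientation, a configuration file of this kind might look roughly like the fragment below. It is a sketch, not the project's E2eDLrec_cfg.py: the process name, the module labels, the output file name and the way the model name is passed to the producer are assumptions, and it only runs inside a CMSSW environment where the FWCore packages and the plugins are available.

```python
import FWCore.ParameterSet.Config as cms
from FWCore.ParameterSet.VarParsing import VarParsing

# The 'analysis' preset provides the standard inputFiles and maxEvents options.
options = VarParsing('analysis')
options.register('EGModelName', 'e_vs_ph_model.pb',
                 VarParsing.multiplicity.singleton,
                 VarParsing.varType.string,
                 "Protobuf file of the electron vs photon model")
options.parseArguments()

process = cms.Process("E2E")  # hypothetical process name

# Which events to read and how many of them to process.
process.maxEvents = cms.untracked.PSet(
    input=cms.untracked.int32(options.maxEvents))
process.source = cms.Source("PoolSource",
    fileNames=cms.untracked.vstring(options.inputFiles))

# Module type names come from this page; labels and parameters are hypothetical.
process.DetImg = cms.EDProducer("DetImgProducer")
process.EGFrames = cms.EDProducer("EGProducer",
    EGModelName=cms.string(options.EGModelName))

# The path fixes the order in which the modules run.
process.p = cms.Path(process.DetImg * process.EGFrames)

# Write the Event content, including the new products, to a ROOT file.
process.out = cms.OutputModule("PoolOutputModule",
    fileName=cms.untracked.string("output.root"))
process.ep = cms.EndPath(process.out)
```

The three ingredients named above map onto this fragment directly: the `cms.EDProducer` lines load the modules, `cms.Path` sets their order, and the registered options carry the configurable parameters from the command line.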

Executing the Code

The configuration options for running the whole process, including all the EDProducers, are in E2eDLrec_cfg.py.

The commands to execute the configuration file, and hence the EDProducers (in E2eDLrec), are:

    $ git clone https://github.com/Shra1-25/E2eDL.git
    $ scram b
    $ cmsRun E2eDL/E2eDLrec/python/E2eDLrec_cfg.py \
    > inputFiles=file:input_root_file_location/filename.root \
    > maxEvents=100 \
    > EGModelName=e_vs_ph_model.pb \
    > QGModelName=ResNet.pb \
    > TopQuarksModelName=ResNet.pb

Before running cmsRun, please ensure that the TensorFlow models (protobuf '.pb' files) are present in the E2eDL folder, alongside E2eDLrec and E2eDLrecClasses. A sample ResNet.pb file is provided there.

Model Architectures

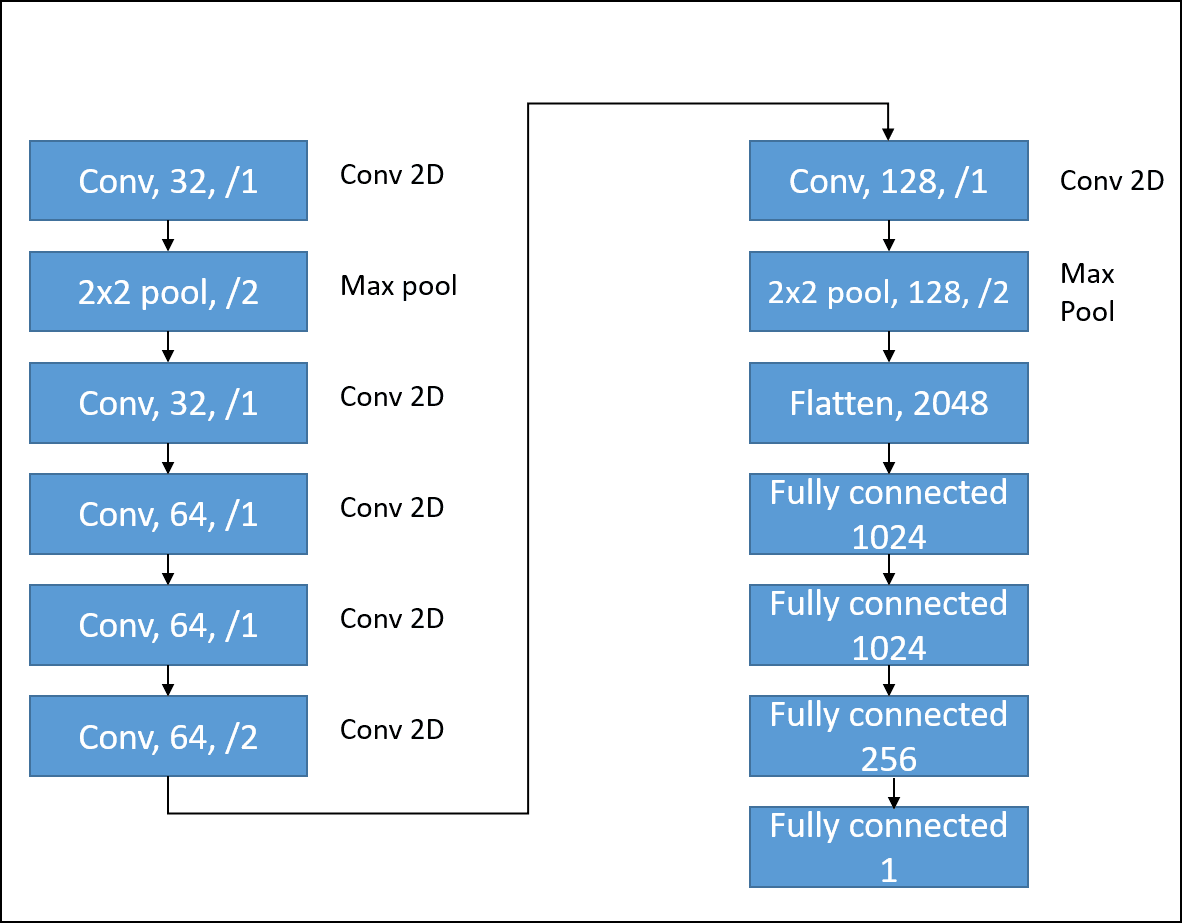

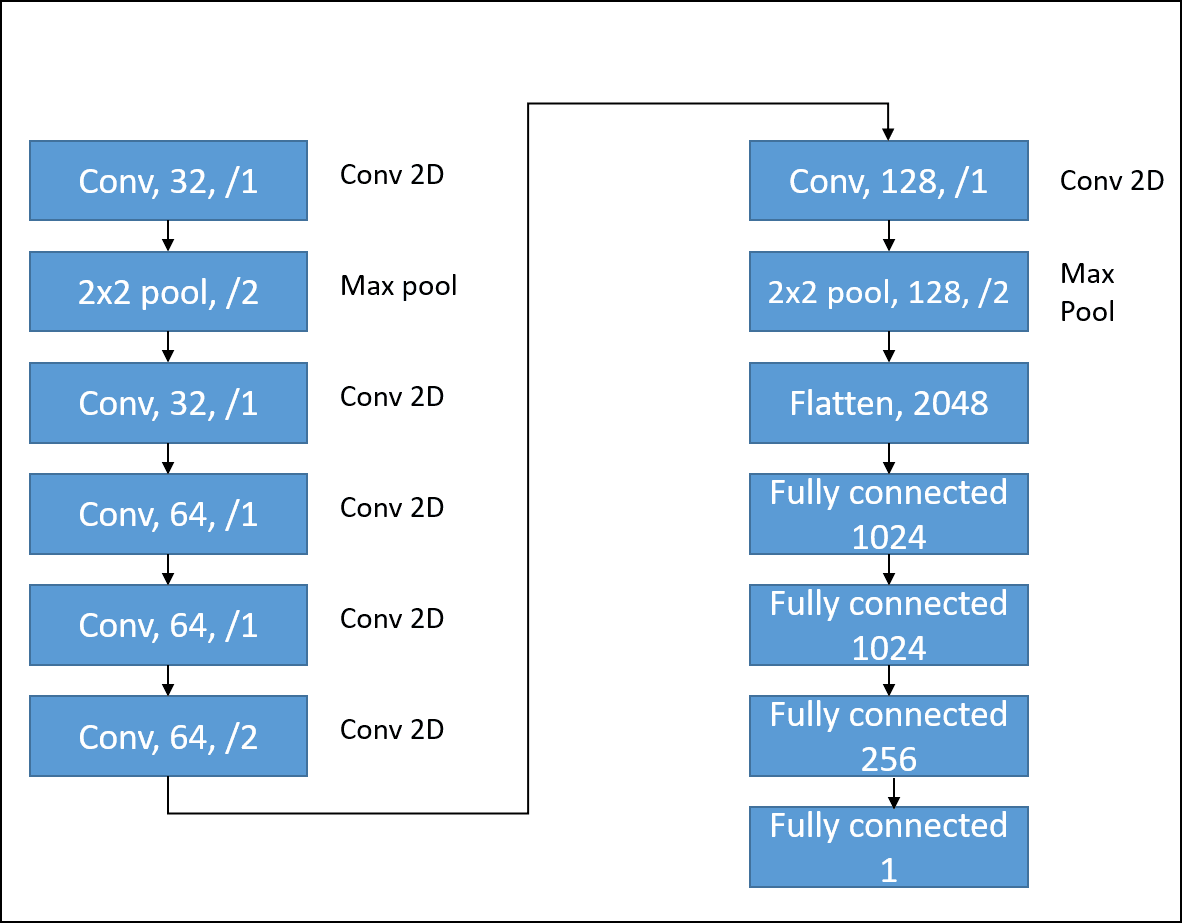

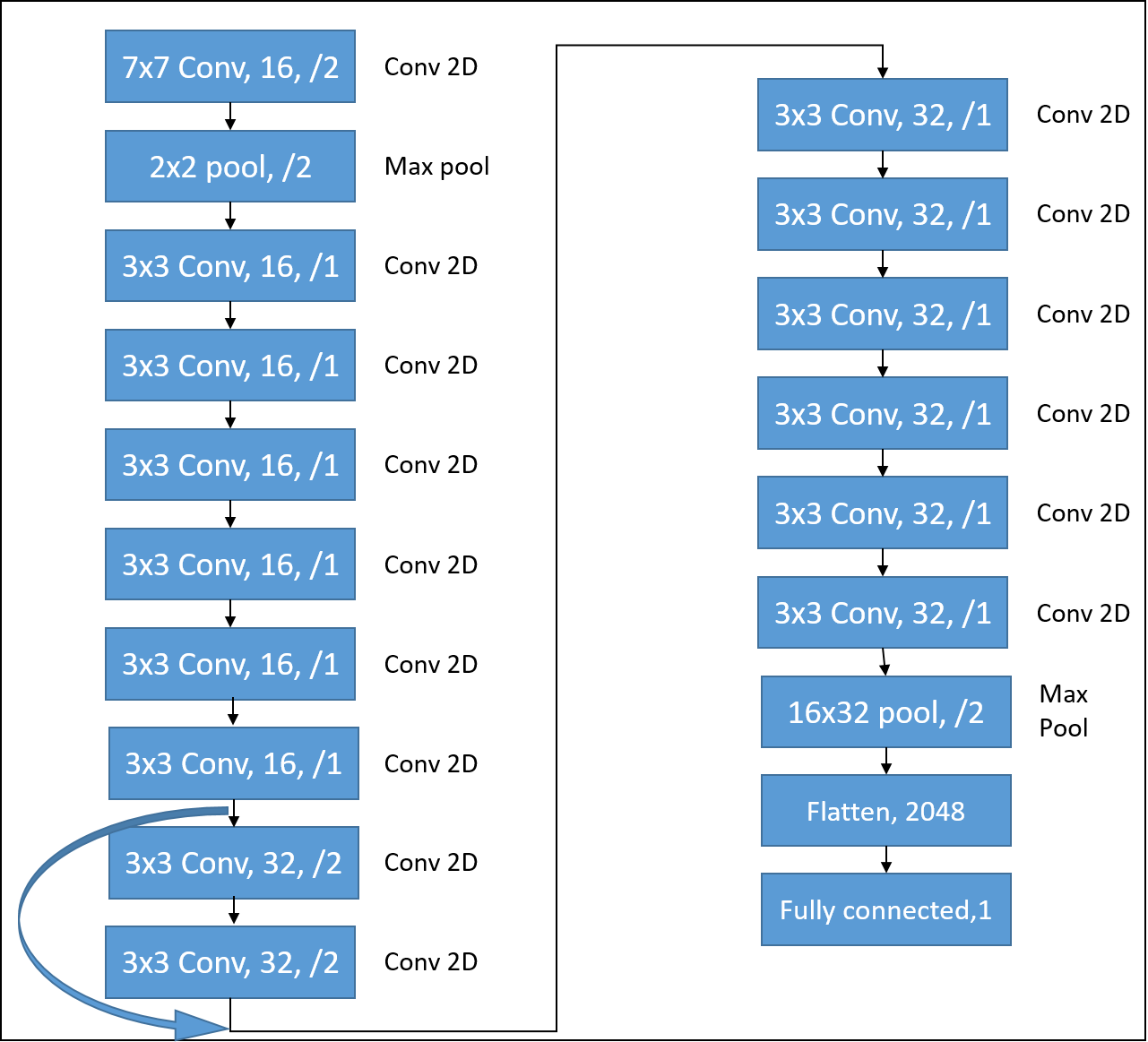

The following is the model architecture for electron vs photon inference. It is a slight variant of VGG:

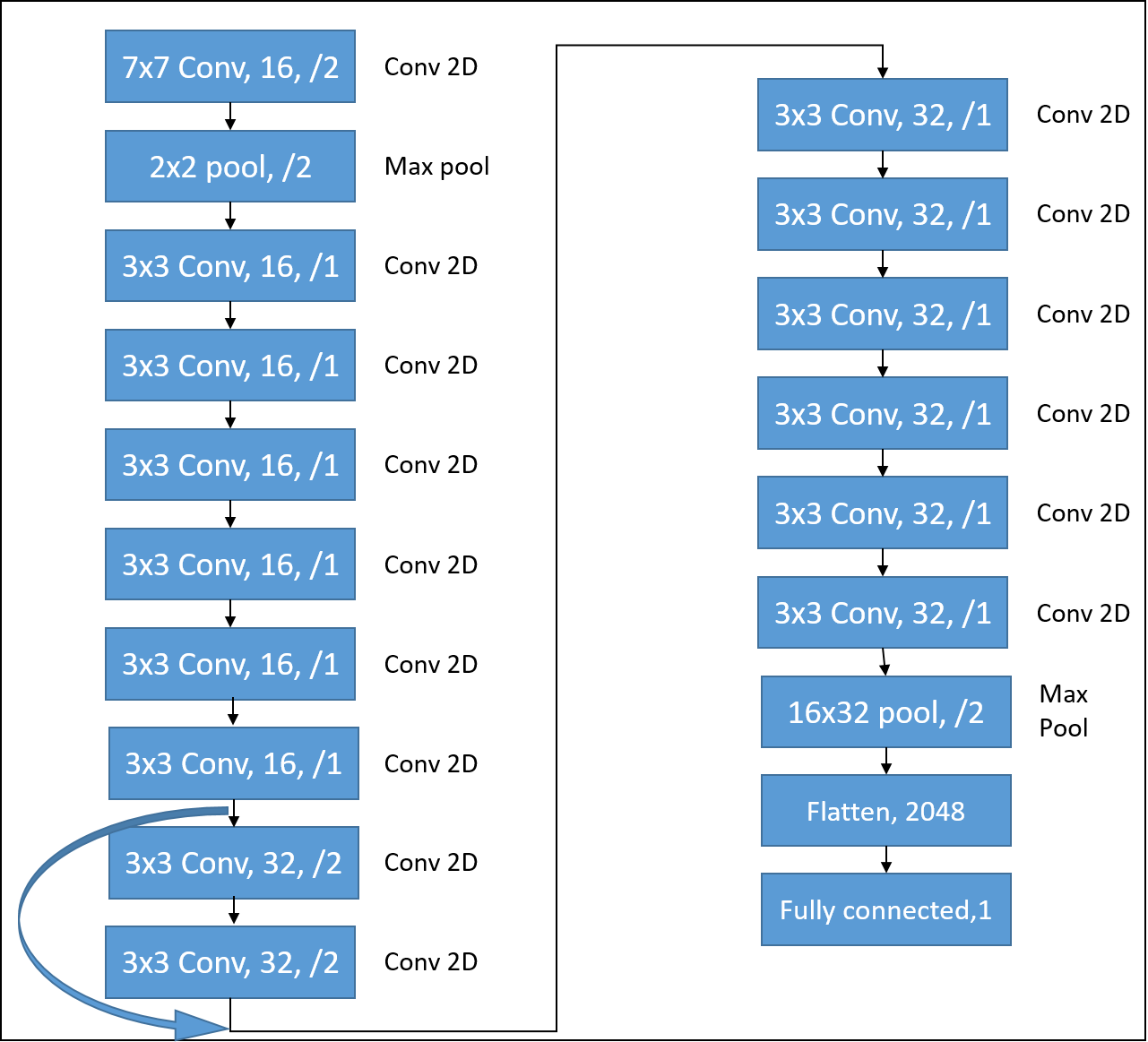

The following is the model architecture for quark vs gluon inference and for top quarks. It is inspired by the ResNet architecture:

Inferences

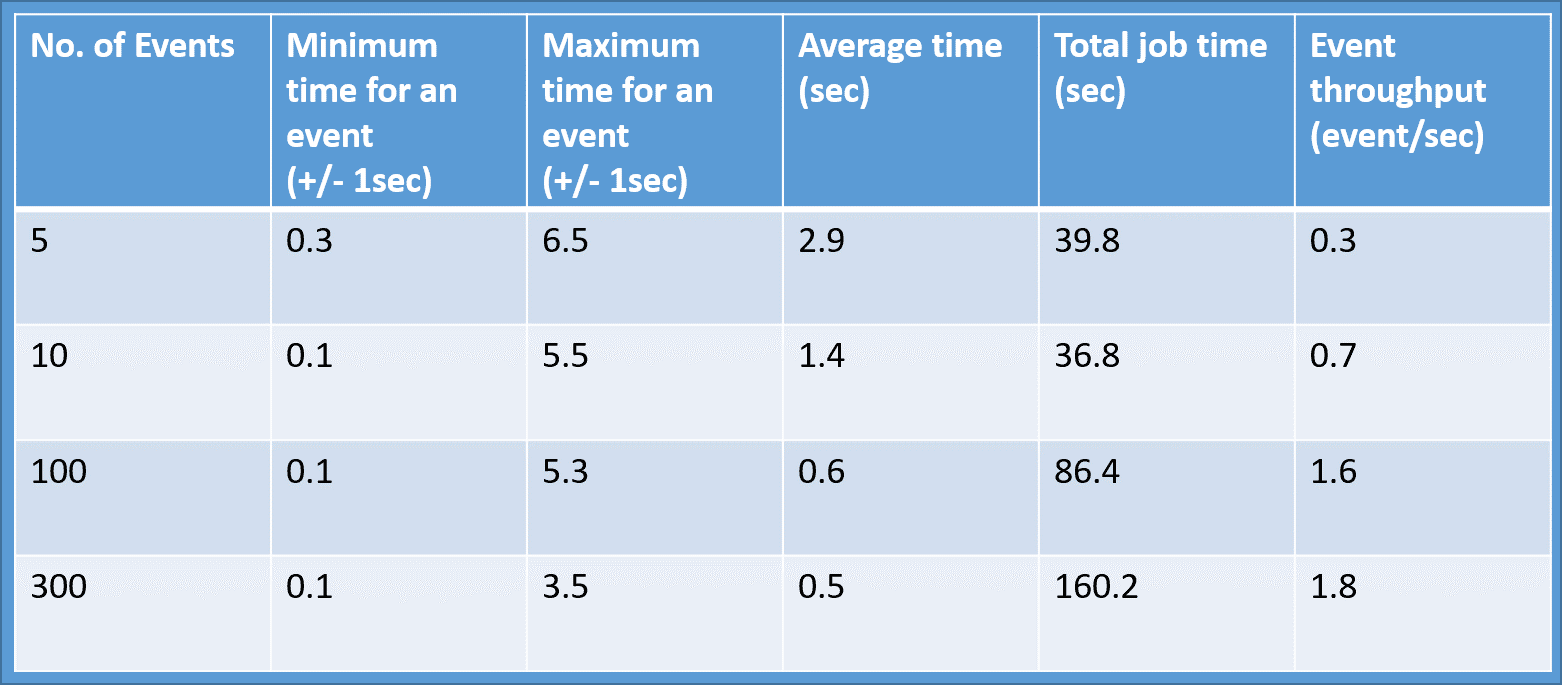

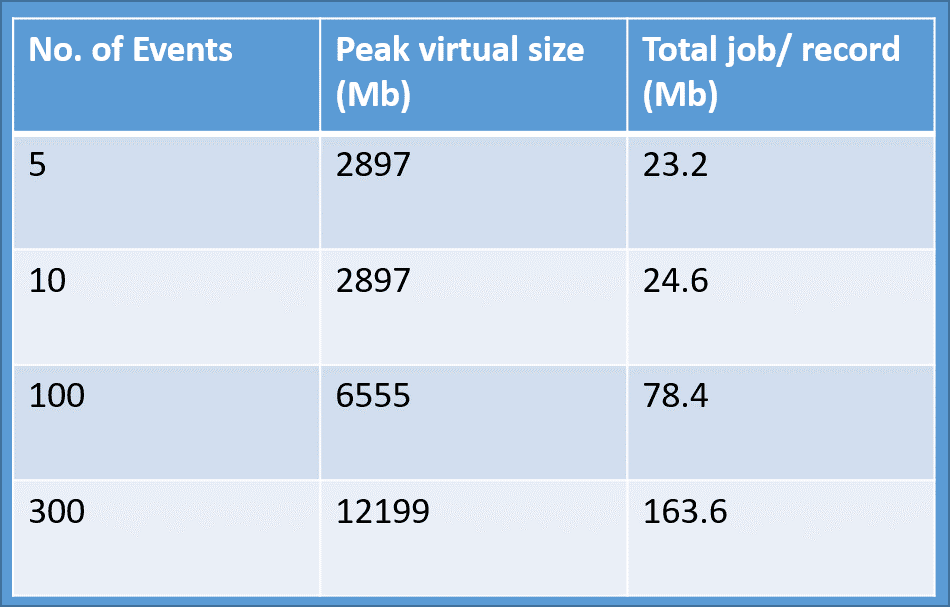

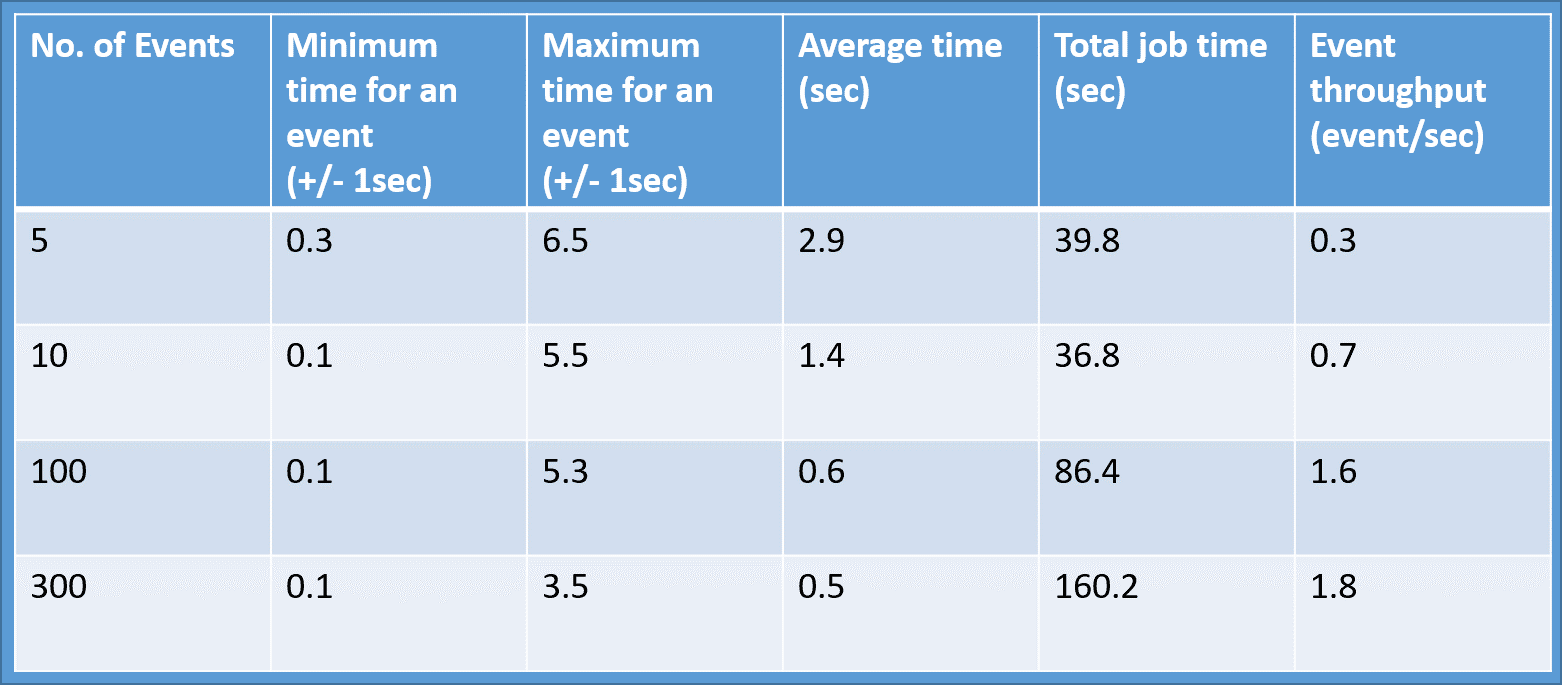

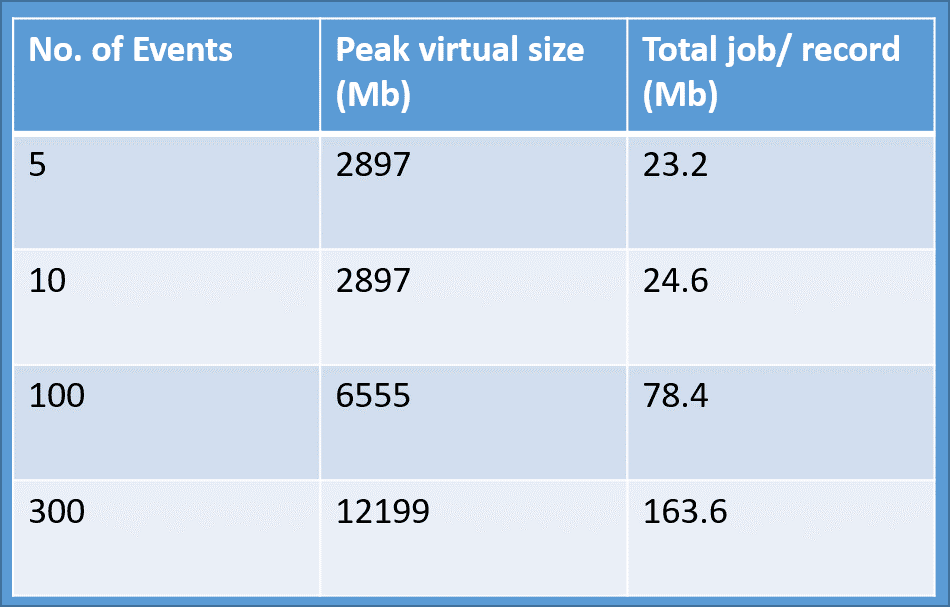

The models used for the following timing and memory checks are e_vs_ph_model and ResNet.

Inference Timing results (on a CPU):

Inference Memory results:

Acknowledgements

I am very grateful and indebted to my mentors Sergei Gleyzer (CERN, Fermilab), Michael Andrews (CERN, CMU), Emanuele Usuai (CERN, Brown) and Darya Dyachkova (CERN, Minerva Schools) for always being available to answer my doubts and queries. I am grateful to the whole E2E team for providing me with an invaluable learning experience during the Google Summer of Code 2020 period. Their help has been extremely useful to me. I am also grateful to CERN-HSF and Google for this wonderful opportunity.
